In a world where borders are blurring and global interaction is part of daily life, language barriers are one of the last big hurdles to overcome. Apple is tackling that problem with a technology that feels almost magical — live translation in AirPods. Imagine walking through a market in Paris, asking a vendor a question in English, and hearing their reply in your own language instantly through your AirPods.

But how does this futuristic feature actually work? Let’s break it down step by step, in plain language.

What Is Live Translation in AirPods?

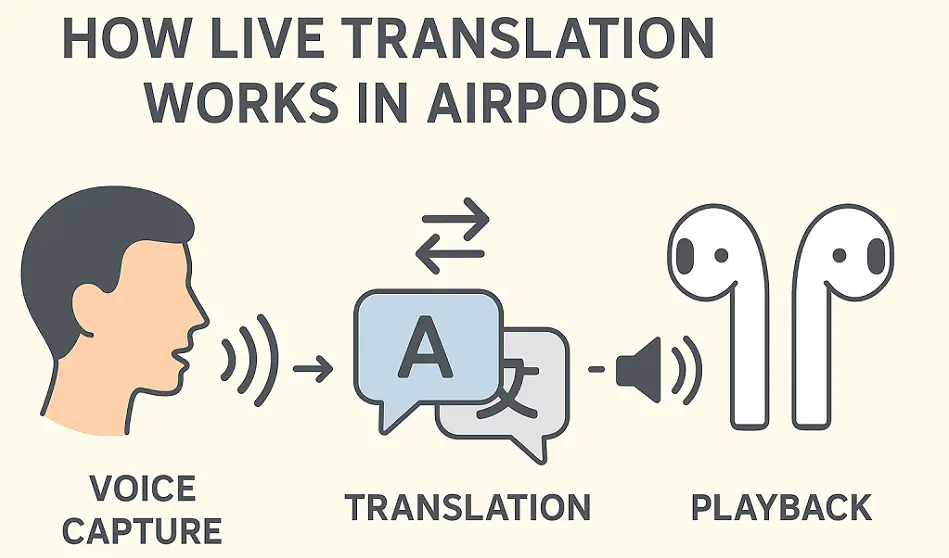

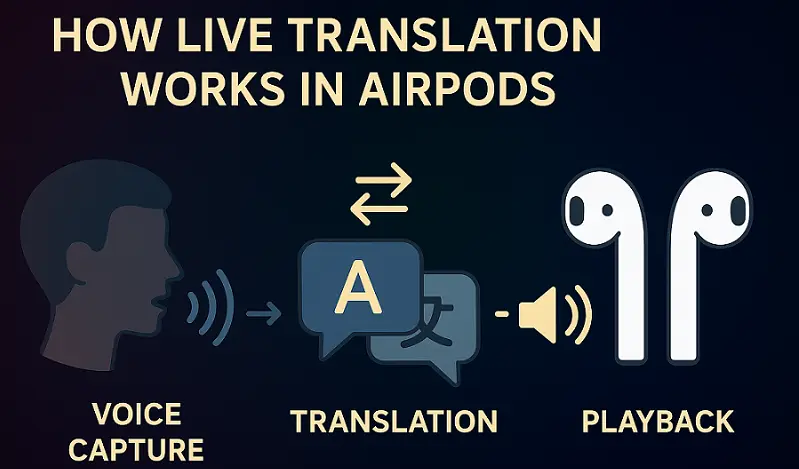

Live translation in AirPods is a feature that allows users to have real-time, two-way conversations in different languages. Using Apple’s speech recognition, machine translation, and text-to-speech engines, AirPods act as a personal interpreter.

Unlike traditional translation apps where you have to record, wait, and read, this system is fast and hands-free — you simply talk, listen, and respond naturally. This makes it perfect for travelers, international meetings, students, and anyone interacting across language barriers.

The Core Technology Behind Live Translation

Live translation is not just a single feature but a combination of multiple advanced technologies working together. Here’s the technical breakdown:

Voice Capture with Precision Microphones

AirPods come with beamforming microphones that focus on the speaker’s voice while filtering out background noise. This ensures that what’s captured is as clean as possible, giving the translation system a good starting point.

Noise cancellation and adaptive transparency also help make the voice input clearer even in noisy environments like airports or cafes.
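To make the idea of beamforming concrete, here is a minimal sketch of the textbook delay-and-sum technique in Python. It is not Apple's implementation, which is not public; the two microphone signals, the 3-sample delay, and the function name `delay_and_sum` are assumptions chosen for illustration.

```python
import math

def delay_and_sum(channels, delays):
    """Align each microphone channel by its integer sample delay, then average.

    channels: list of equal-length sample lists, one per microphone.
    delays:   number of samples by which each channel lags the reference mic.
    """
    length = len(channels[0])
    out = []
    for n in range(length):
        total = 0.0
        for samples, d in zip(channels, delays):
            idx = n + d  # read ahead to undo this channel's lag
            if 0 <= idx < length:
                total += samples[idx]
        out.append(total / len(channels))
    return out

# Two hypothetical microphones: the second hears the same tone 3 samples later.
tone = [math.sin(2 * math.pi * 5 * n / 100) for n in range(100)]
mic_a = tone
mic_b = [0.0] * 3 + tone[:-3]

aligned = delay_and_sum([mic_a, mic_b], delays=[0, 3])    # steered at the speaker
unaligned = delay_and_sum([mic_a, mic_b], delays=[0, 0])  # no steering

def energy(signal):
    return sum(x * x for x in signal)

print(energy(aligned) > energy(unaligned))  # True
```

When the delays match the direction the voice comes from, the two copies add up in phase and the voice is preserved. Sound arriving with a different delay adds up out of phase and is attenuated, which is the effect the unsteered case shows here.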

Speech-to-Text (STT) Conversion

Once the voice is captured, a speech recognition model converts the audio into text. The AirPods handle the capture, while the paired iPhone or iPad generally does the heavy processing. Apple uses on-device neural networks for supported languages, which means faster and more private processing.

The result is a text transcript of what the other person said, which becomes the input for the next step.

Neural Machine Translation (NMT)

This is where the magic happens. Neural Machine Translation models are trained on millions of sentences to understand grammar, idioms, and context. Instead of translating word-by-word, the model considers entire sentences, resulting in natural-sounding translations that make sense to a human listener.

Apple’s translation engine competes with big players like Google Translate but is focused on privacy and speed, often doing the processing offline.

Text-to-Speech (TTS) Conversion

After translation, the system uses a text-to-speech engine to generate a natural, human-like voice output. You hear the translated sentence in your AirPods as though someone is speaking directly to you.

This final step is fast enough that the whole process feels close to a natural conversation.
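The three stages above (speech-to-text, translation, text-to-speech) can be sketched as a simple chain. This is only an illustration of the data flow: the class name `TranslationPipeline`, the stage functions, and the tiny phrase table are hypothetical stand-ins, not Apple APIs or real models.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class TranslationPipeline:
    """Chains the three stages. All callables are hypothetical stand-ins."""
    speech_to_text: Callable[[bytes], str]
    translate: Callable[[str], str]
    text_to_speech: Callable[[str], bytes]

    def run(self, audio: bytes) -> bytes:
        transcript = self.speech_to_text(audio)  # STT: audio in, text out
        translated = self.translate(transcript)  # NMT: text in, text out
        return self.text_to_speech(translated)   # TTS: text in, audio out

# Toy stages so the sketch runs end to end.
PHRASES = {"bonjour": "hello", "merci": "thank you"}

pipeline = TranslationPipeline(
    speech_to_text=lambda audio: audio.decode("utf-8"),      # pretend audio is text
    translate=lambda text: PHRASES.get(text.lower(), text),  # lookup, not a real NMT model
    text_to_speech=lambda text: text.encode("utf-8"),        # pretend bytes are audio
)

print(pipeline.run(b"Bonjour"))  # b'hello'
```

Keeping each stage behind its own function makes it easy to swap one out, for example replacing a local translation model with a remote one, without touching the other two.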

On-Device vs. Cloud Processing

A big part of what makes Apple’s live translation unique is its emphasis on on-device processing. Here’s why that matters:

- Privacy: Your conversations don’t always have to leave your device.

- Speed: Local processing reduces network delays, making translations almost instant.

- Offline Use: You can download language packs to translate even without internet access — ideal for travelers.

For languages that require higher computational power or aren’t available offline, Apple temporarily uses its secure cloud servers, then streams back the translation to your device.
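The choice between local and cloud processing described above can be sketched as a small routing function. The function name `choose_backend`, the language codes, and the rule that both languages need a downloaded pack are assumptions for illustration; Apple's actual selection logic is not documented here.

```python
def choose_backend(source, target, downloaded_packs, online):
    """Return 'on_device', 'cloud', or 'unavailable' for a language pair."""
    # Assumption: local translation needs a downloaded pack for both languages.
    if source in downloaded_packs and target in downloaded_packs:
        return "on_device"
    if online:
        return "cloud"
    return "unavailable"

packs = {"en", "fr"}  # hypothetical set of downloaded language packs
print(choose_backend("fr", "en", packs, online=False))  # on_device
print(choose_backend("ja", "en", packs, online=True))   # cloud
print(choose_backend("ja", "en", packs, online=False))  # unavailable
```

Checking the local packs first reflects the priorities listed above: privacy and speed when possible, with the network used only as a fallback.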

Latency and Speed: Why It Feels Instant

The biggest challenge with live translation is latency — the time between hearing the other person speak and hearing the translation.

AirPods and iPhone work together to keep this delay as short as possible by:

- Streaming partial transcriptions (you don’t have to wait for the entire sentence).

- Predictive processing, where the AI guesses the end of a sentence and refines the guess if needed.

- Optimized Bluetooth transfer, keeping audio lag between your iPhone and AirPods to a minimum.

The result is a delay so short that most users find it natural enough to carry a conversation without awkward pauses.
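One common way to stream partial results without speaking words that later change is to commit only the words on which two consecutive partial hypotheses agree. The sketch below shows that idea; it is an assumption about how such a system could work, not a description of Apple's method, and the function names and sample hypotheses are made up.

```python
def stable_prefix(previous, current):
    """Words on which two consecutive partial hypotheses agree, from the start."""
    agreed = []
    for a, b in zip(previous, current):
        if a != b:
            break
        agreed.append(a)
    return agreed

def stream_commits(hypotheses):
    """Yield newly committed words as each partial hypothesis arrives."""
    committed = 0
    previous = []
    for current in hypotheses:
        agreed = stable_prefix(previous, current)
        if len(agreed) > committed:
            yield agreed[committed:]
            committed = len(agreed)
        previous = current

# Hypothetical recognizer output that revises its last word.
partials = [
    ["where"],
    ["where", "is"],
    ["where", "is", "the", "stationary"],
    ["where", "is", "the", "station"],
]
for words in stream_commits(partials):
    print(words)
# ['where']
# ['is']
# ['the']
```

The recognizer's early guess "stationary" is never committed because the next hypothesis revises it. The final word stays pending until a later hypothesis confirms it or the utterance ends, which is the price paid for not having to retract anything already passed downstream.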

Supported Languages and Growing List

As of 2025, Apple Translate supports over 20 languages, including popular ones like English, Spanish, French, German, Chinese, Japanese, Korean, and Arabic. Each iOS update brings additional languages and better accuracy.

Expect Apple to keep expanding the list, as live translation is becoming a key feature for global communication.

Practical Use Cases for AirPods Live Translation

Live translation is more than just a cool tech demo. Here are real-life scenarios where it’s a game-changer:

- Travel: Ask for directions, order food, or negotiate prices abroad with confidence.

- Business Meetings: Talk to clients and partners in their native language without hiring interpreters.

- Education: Students can listen to lectures in foreign languages and understand instantly.

- Healthcare: Doctors can communicate with patients who speak different languages in emergencies.

- Social Interaction: Make new friends worldwide without language barriers.

Benefits of Live Translation in AirPods

- Convenience: No need to take out your phone repeatedly.

- Privacy: Conversations stay in your ears, not broadcast over a loudspeaker.

- Speed: Faster than typing into a translator app.

- Natural Conversations: Near real-time flow allows for smooth dialogue.

- Accessibility: Helps those learning a new language pick up words faster.

Limitations and Challenges

While the technology is impressive, there are still some challenges:

- Accuracy: Complex idioms, slang, or technical terms may not always translate perfectly.

- Battery Usage: Continuous microphone use and processing drain the battery faster.

- Connectivity: For unsupported offline languages, internet access is required.

- Latency in Long Sentences: Longer, more complex sentences may add a slightly longer delay.

Apple continues improving these areas with every iOS update and AirPods generation.

Future of Live Translation in AirPods

The future looks even more exciting:

- AI-driven context awareness: Understanding tone, emotion, and situational context for better translations.

- Automatic conversation detection: AirPods could detect when someone is speaking a different language and automatically enable translation mode.

- Integration with Vision Pro: Combine real-time subtitles with audio translation for a complete AR communication experience.

- More languages & dialects: Expansion into regional dialects and slang for even better global coverage.

Live translation in AirPods represents one of the most powerful communication breakthroughs of our time. By combining speech recognition, neural machine translation, and natural speech synthesis, Apple has turned your AirPods into a real-time interpreter.

While there’s still room for improvement, this feature is already changing how we travel, work, and interact with the world. As Apple continues refining this technology, the dream of effortless global communication is becoming reality.

Related Articles

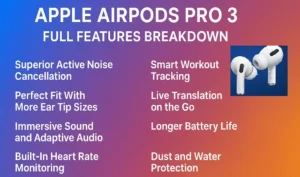

- Apple AirPods Pro 3: Full Features | ANC | Heart Rate Sensing | Lossless Adaptive Audio

- Future of Real-Time Translation in Audio Devices – What’s Next

- How is AirPods Pro 3 different from AirPods Pro 2?

- How Live Translation Works in AirPods ?

- Lossless Audio & AirPods Pro 3 — Complete Guide – Tech Myths & How-to’s

- Tips to Extend AirPods Battery Life

- What Does IP57 Mean in AirPods Pro 3?

- What is Adaptive Audio in AirPods Pro 3 ? – Everything You Need to Know

Leave a Reply